We’ve filed lawsuits challenging ChatGPT, LLaMA, and other language models for violating the legal rights of authors.

Because AI needs to be fair & ethical for everyone.

This is Joseph Saveri and Matthew Butterick. In November 2022, we teamed up to file a lawsuit challenging GitHub Copilot, an AI coding assistant built on unprecedented open-source software piracy. In January 2023, we filed a lawsuit challenging Stable Diffusion and Midjourney, AI image generators built on the heist of five billion digital images.

On behalf of seven wonderful book authors, we’ve filed four class-action lawsuits—against OpenAI, Meta, NVIDIA, and Databricks—challenging the legality of large language models trained on copyrighted works without consent, compensation, or credit.

It’s a great pleasure to stand up on behalf of authors and continue the vital conversation about how AI will coexist with human culture and creativity.

The plaintiffs

Our plaintiffs are accomplished book authors who have stepped forward to represent a class of thousands of other writers afflicted by generative AI.

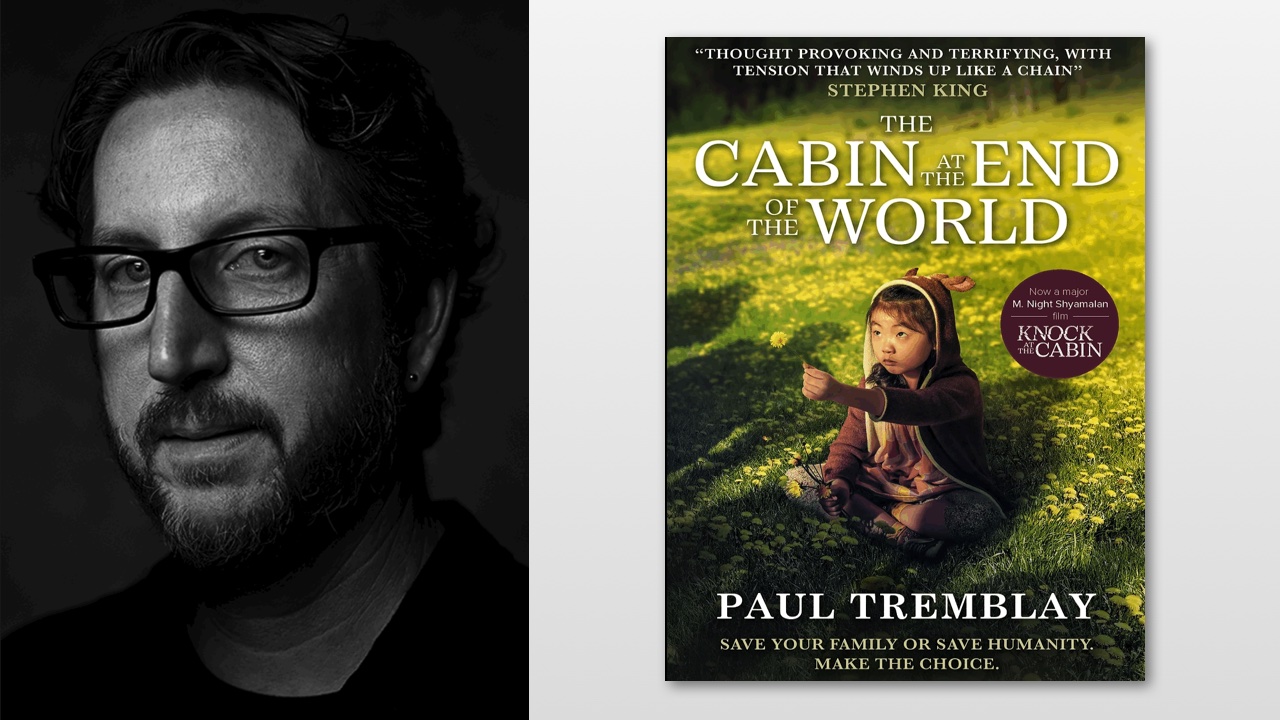

Paul Tremblay

Paul Tremblay has won the Bram Stoker, British Fantasy, and Massachusetts Book awards and is the author of Survivor Song, The Cabin at the End of the World, Disappearance at Devil’s Rock, A Head Full of Ghosts, the crime novels The Little Sleep and No Sleep Till Wonderland, and the short story collection Growing Things and Other Stories.

His essays and short fiction have appeared in the Los Angeles Times, New York Times, Entertainment Weekly online, and numerous year’s-best anthologies. He has a master’s degree in mathematics and lives outside Boston with his family.

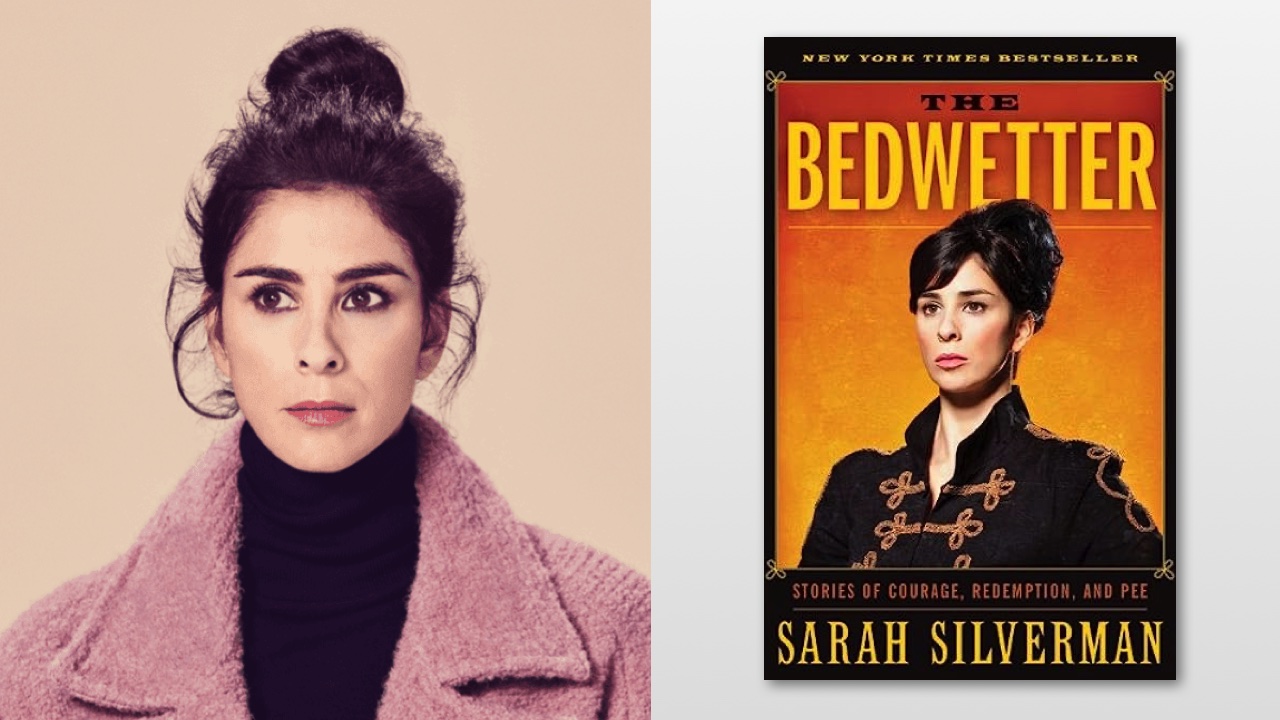

Sarah Silverman

Sarah Silverman is a two-time Emmy Award-winning comedian, actress, writer, and producer. She currently hosts a critically acclaimed weekly podcast, The Sarah Silverman Podcast. She can next be seen as the host of TBS’ Stupid Pet Tricks, an expansion of the famous David Letterman late-night segment. In spring 2022, Silverman’s off-Broadway musical adaptation of her 2010 New York Times bestselling memoir The Bedwetter: Stories of Courage, Redemption, and Pee had a sold-out run with the Atlantic Theatre Company.

On stage, Silverman continues to cement her status as a force in stand-up comedy. Silverman also lent her voice as Vanellope in the Oscar-nominated smash hit Wreck-It Ralph and Golden Globe-nominated Ralph Breaks the Internet: Wreck-It Ralph 2. Silverman was nominated for a 2009 Primetime Emmy Award for “Outstanding Lead Actress in a Comedy Series” for portraying a fictionalized version of herself in her Comedy Central series The Sarah Silverman Program. In 2008, Silverman won a Primetime Emmy Award for “Outstanding Original Music and Lyrics” for her musical collaboration with Matt Damon.

Silverman grew up in New Hampshire and attended New York University for one year. In 1993 she joined Saturday Night Live as a writer and feature performer and has not stopped working since. She currently lives in Los Angeles.

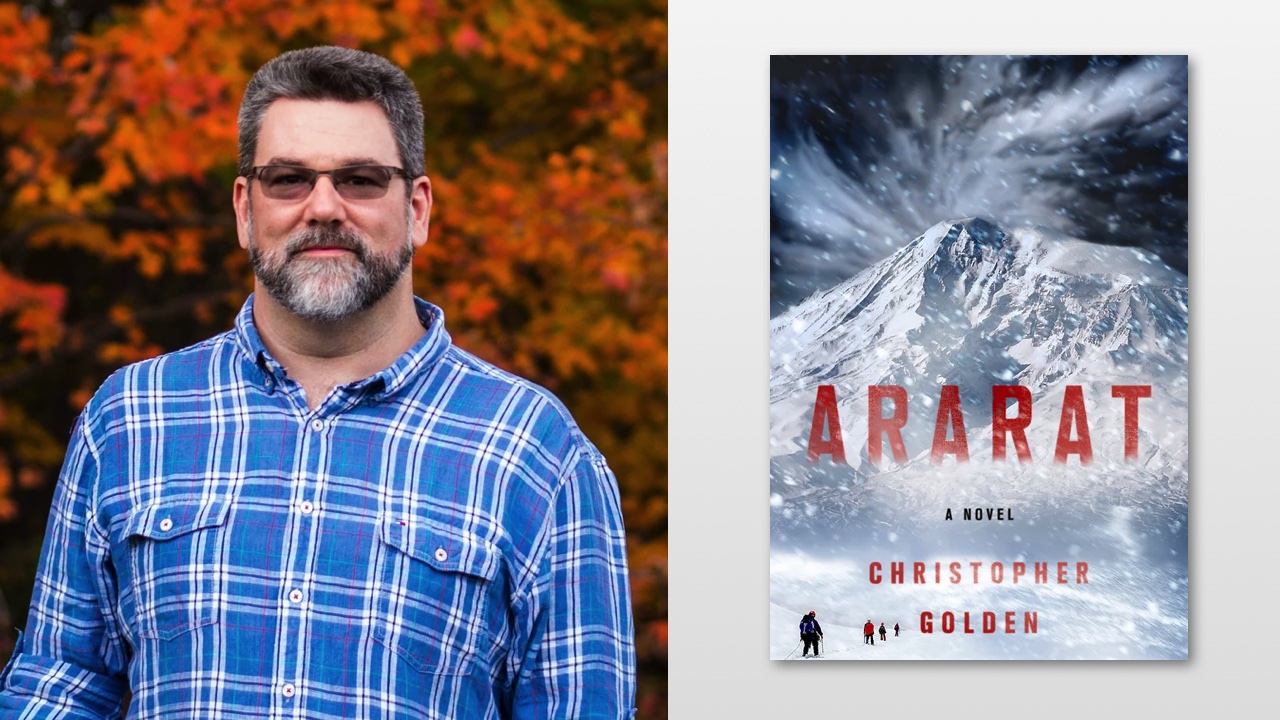

Christopher Golden

Christopher Golden is the New York Times bestselling, Bram Stoker Award-winning author of such novels as Road of Bones, Ararat, Snowblind, and Red Hands. With Mike Mignola, he is the co-creator of the Outerverse comic book universe, including such series as Baltimore, Joe Golem: Occult Detective, and Lady Baltimore. As an editor, he has worked on the short story anthologies Seize the Night, Dark Cities, and The New Dead, among others, and he has also written and co-written comic books, video games, screenplays, and a network television pilot. In 2015 he founded the popular Merrimack Valley Halloween Book Festival.

He was born and raised in Massachusetts, where he still lives with his family. His work has been nominated for the British Fantasy Award, the Eisner Award, and multiple Shirley Jackson Awards. For the Bram Stoker Awards, Golden has been nominated ten times in eight different categories, and won twice. His original novels have been published in more than fifteen languages in countries around the world.

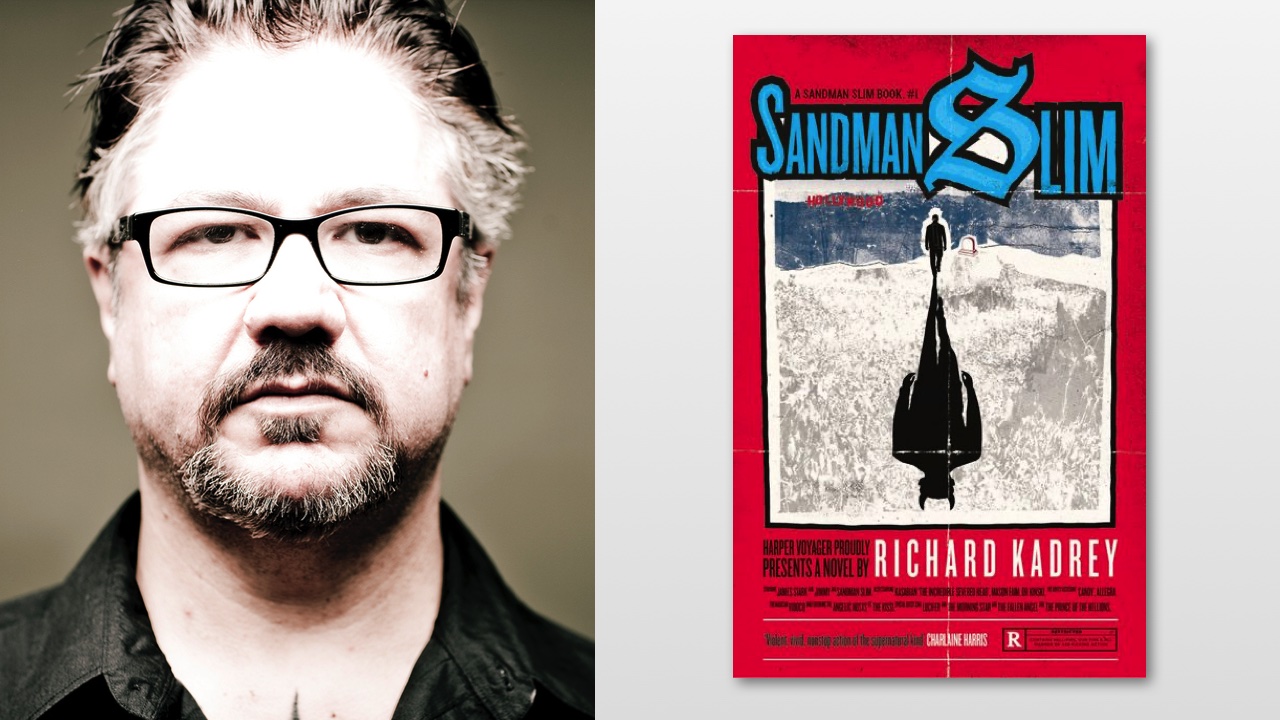

Richard Kadrey

Richard Kadrey is the New York Times bestselling author of the Sandman Slim supernatural noir series. Sandman Slim was included in Amazon’s “100 Science Fiction & Fantasy Books to Read in a Lifetime,” and is in development as a feature film. Some of Kadrey’s other books include King Bullet, The Grand Dark, Butcher Bird, and The Dead Take the A Train (with Cassandra Khaw). He’s written for film and comics, including Heavy Metal, Lucifer, and Hellblazer. Kadrey also makes music with his band, A Demon in Fun City.

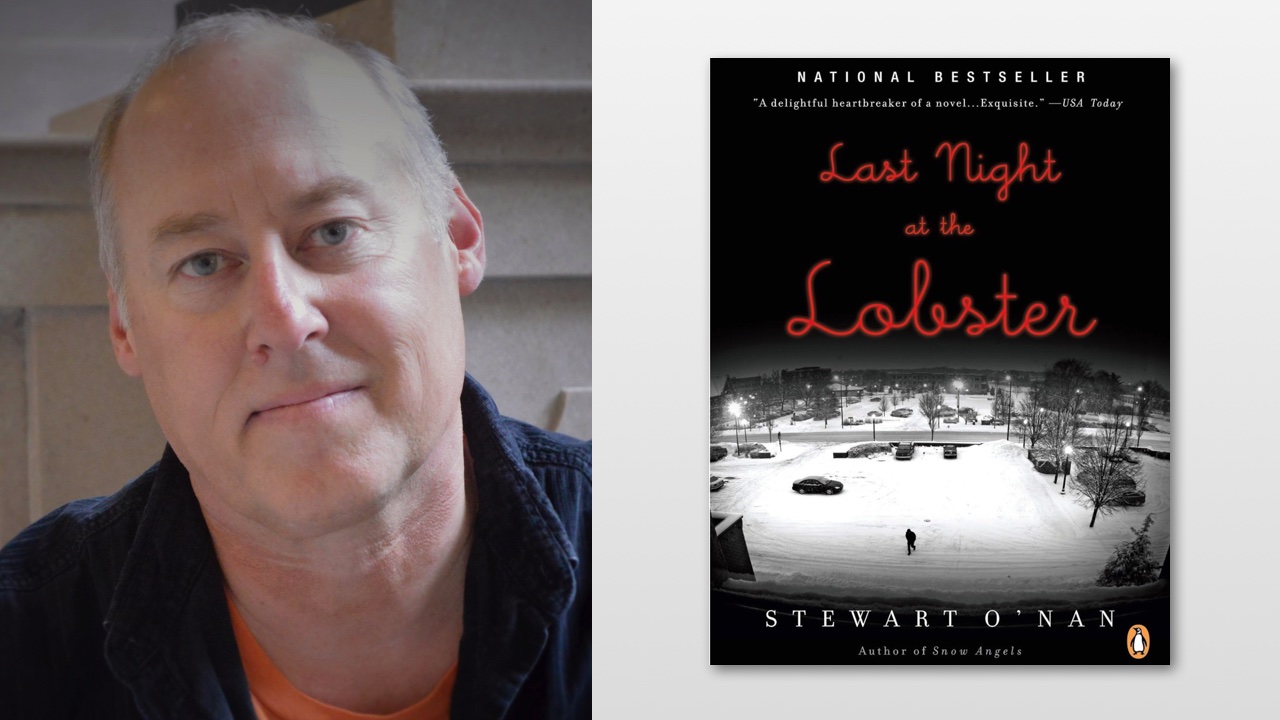

Stewart O’Nan

Stewart O’Nan’s award-winning fiction includes Snow Angels, A Prayer for the Dying, Last Night at the Lobster, and Emily, Alone. Granta named him one of America’s Best Young Novelists. He lives in Pittsburgh. (Photo: Beth Navarro)

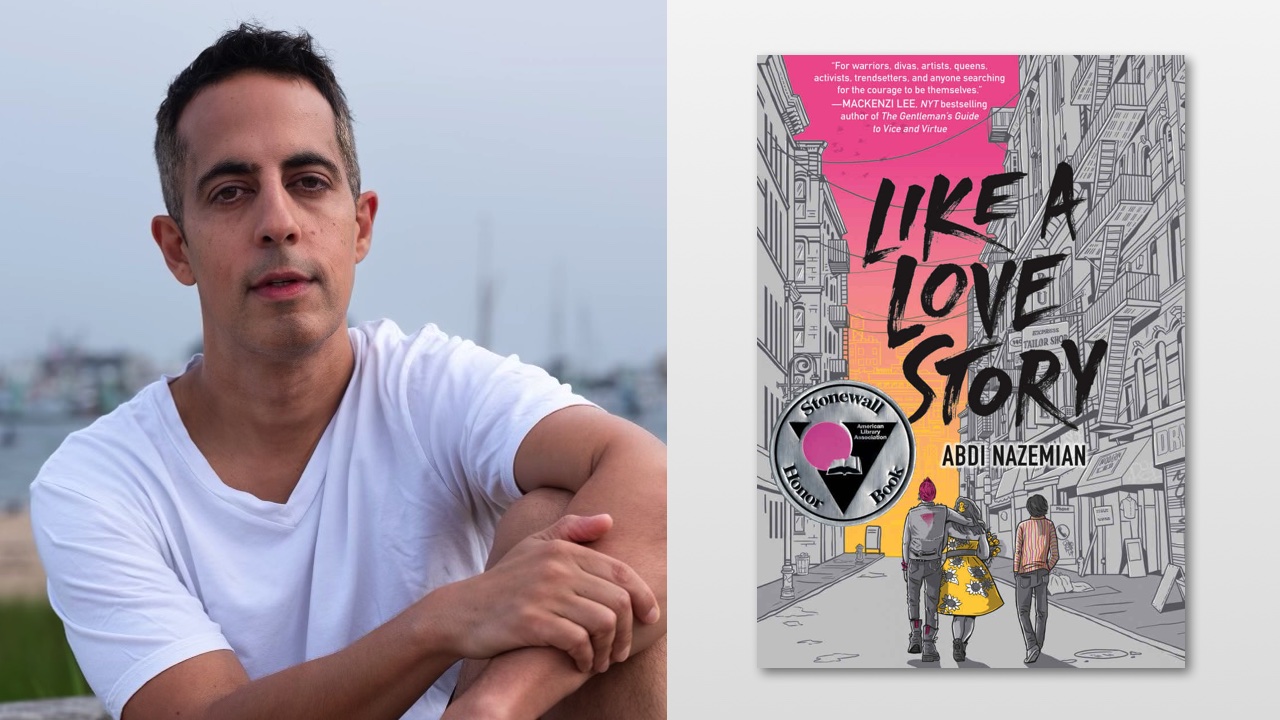

Abdi Nazemian

Abdi Nazemian is the author of Like a Love Story (a Stonewall Honor Book), Only This Beautiful Moment, The Chandler Legacies, and The Authentics. His novel The Walk-In Closet won the Lambda Literary Award for LGBT Debut Fiction. His screenwriting credits include the films The Artist’s Wife, The Quiet, and Menendez: Blood Brothers and the television series Ordinary Joe and The Village. He has been an executive producer and associate producer on numerous films, including Call Me by Your Name, Little Woods, and The House of Tomorrow. He lives in Los Angeles with his husband, their two children, and their dog, Disco. (Photo: Michelle Schapiro)

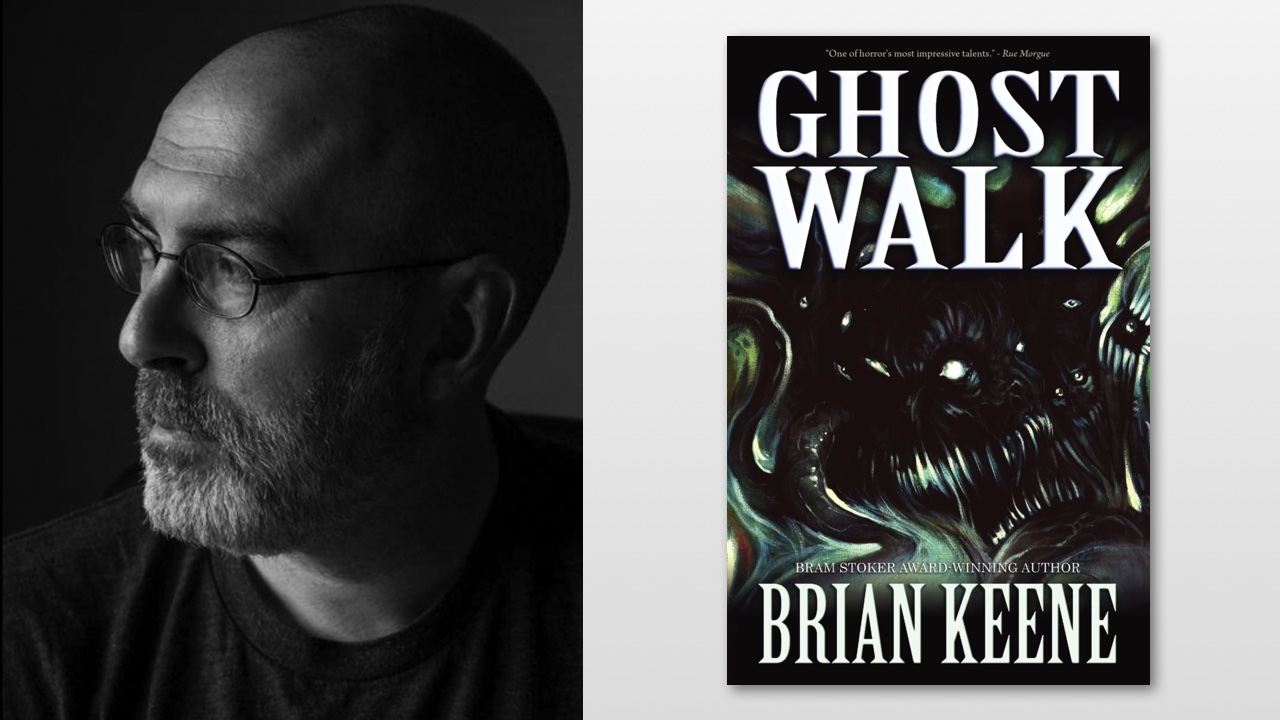

Brian Keene

Brian Keene is the author of over fifty books, including Ghost Walk, and three hundred short stories, mostly in the horror, crime, fantasy, and non-fiction genres. His 2003 novel The Rising is credited with inspiring pop culture’s recurrent interest in zombies. He has also written for such media properties as Doctor Who, Thor, Aliens, Harley Quinn, The X-Files, Doom Patrol, Justice League, Hellboy, Superman, and Masters of the Universe. He was the showrunner for Realm Media and Blackbox TV’s Silverwood: The Door.

Several of Keene’s novels and stories have been adapted for film, including Ghoul, The Naughty List, The Ties That Bind, and Fast Zombies Suck. Keene also served as executive producer for the feature-length film I’m Dreaming of a White Doomsday.

From 2015 to 2020, he hosted the immensely popular The Horror Show with Brian Keene podcast. He also hosted (along with Christopher Golden) the long-running Defenders Dialogue podcast. Keene also serves on the board of Scares That Care, and as a trustee for the Horror Writers Association.

The father of two sons and stepfather to one daughter, Keene lives in Pennsylvania with his wife, author Mary SanGiovanni, and several cats. (Photo: John Urbancik)

Books as training data

Though an AI language model (often called a large language model, or LLM for short) is a software program, it isn’t created the way most software programs are, that is, by human software engineers writing code.

Rather, an LLM is “trained” by copying massive amounts of text from various sources and feeding these copies into the model. (This corpus of input material is called the training dataset.)

During training, the LLM copies each work in the training dataset and the copyrighted expression contained therein. The LLM progressively adjusts its output to more closely resemble the sequences of words copied from the training dataset. Once the LLM has copied and ingested all this text, it is able to emit convincing simulations of natural written language as it appears in the training dataset.

Much of the material in the training datasets used by the defendants comes from copyrighted works—including books written by Plaintiffs—that were copied and used for training without consent, without credit, and without compensation. Many of these books likely came from “shadow libraries”, websites that piratically distribute thousands of copyrighted books and publications.

Books in particular are recognized within the AI community as valuable training data. A team of researchers from MIT and Cornell recently studied the value of various kinds of textual material for machine learning. Books were placed in the top tier of training data that had “the strongest positive effects on downstream performance.” Books are also comparatively “much more abundant” than other sources, and contain the “longest, most readable” material with “meaningful, well-edited sentences”.

Right—because as usual, “generative artificial intelligence” is just human intelligence, repackaged and divorced from its creators.

And the grift doesn’t end at training. The project of steering AI systems toward something other than the worst version of humanity is known as alignment. So far, the best-known technique is euphemistically called reinforcement learning from human feedback, which for OpenAI entails hiring low-wage foreign workers to spend hours with ChatGPT, nudging it away from toxic results.

The defendants

OpenAI

OpenAI, founded by Elon Musk and Sam Altman in 2015, is based in San Francisco. According to Altman, the two started OpenAI as a nonprofit venture “to develop a human positive AI … freely owned by the world.”

OpenAI’s status as a nonprofit was critical to its initial positioning. As Altman said then, “anything [OpenAI] develops will be available to everyone.” Why? Because they believed this approach was “the actual best thing for the future of humanity.”

The honeymoon would end. In 2018, Musk left OpenAI amidst disputes with Altman over its direction. In 2019, Altman reversed himself on OpenAI’s nonprofit purity and created a for-profit subsidiary. Later that year, OpenAI took a $1 billion investment from Microsoft. By January 2023, Microsoft had progressively increased its investment to $13 billion.

In February 2023, Musk sharply criticized OpenAI, saying that it “was created as an open source … non-profit company” but had “become a closed source, maximum-profit company effectively controlled by Microsoft.” By March 2023, Altman’s new “grand idea” was that “OpenAI will capture much of the world’s wealth.” How much? Altman suggested “$100 billion, $1 trillion, $100 trillion.” (The total United States money supply, by its broadest measure, known as M2, currently sits at roughly $20.8 trillion.) In February 2024, Musk sued OpenAI for breach of contract.

Meta

Meta is a maker of virtual-reality products, including Horizon Worlds. Meta also sells advertising on its websites Facebook, Instagram, and WhatsApp. In 2019, Meta (then known as Facebook) was fined $5 billion by the FTC for privacy violations arising from improper handling of personal data. In May 2023, Meta was fined €1.2 billion by EU regulators, also for privacy violations arising from improper handling of personal data.

Since 2013, Meta has operated an AI research lab, called Meta AI, founded by Mark Zuckerberg and Yann LeCun. Though Meta has used AI in the past to spread fake news and hate speech, Meta AI is currently working on applying AI technology to selling advertising.

In February 2023, to compete with OpenAI’s ChatGPT system, Meta AI released a set of LLMs called LLaMA. Though Meta had intended to share LLaMA only with a select group of users, the models soon leaked to a public internet site. This led to an inquiry by the US Senate Subcommittee on Privacy, Technology, and the Law, which noted the “potential for [LLaMA’s] misuse in spam, fraud, malware, privacy violations, harassment, and other wrongdoing and harms.”

NVIDIA

NVIDIA is a technology company founded in 1993 that originally focused on computer-graphics hardware and has since expanded to other computationally intensive fields, including software and hardware for training and operating AI software programs. NVIDIA has trained a series of large language models called NeMo Megatron on a dataset called The Pile, which includes thousands of copyrighted books.

Databricks and MosaicML

Databricks is an AI services company in San Francisco. In July 2023, it acquired MosaicML, a maker of generative-AI tools. MosaicML has trained a series of large language models called MPT on a dataset called RedPajama, which includes thousands of copyrighted books.

Email updates

If you’d like to receive occasional email updates on the progress of the cases, please use the links below.

Contacting us

If you’re a member of the press or the public with other questions about this case or related topics, email llmlitigation@saverilawfirm.com. (Though please don’t send confidential or privileged information.)